Artificial Intelligence, Energy and the Economy

Introduction

The release of ChatGPT on Nov. 30, 2022, sparked a global conversation about the future of computing. By January 2023, this chatbot, built on a large language model, had 100 million users, a number that rose to 173 million by April 2023. Created and released by the company OpenAI, ChatGPT gave the broader public its first direct access to the computational power of artificial intelligence (AI) and its ability to generate human-like text with strong contextual understanding.

These advances in AI and machine learning, embodied in ChatGPT, Google’s Bard, Meta’s LLaMA and various open-source models, could fundamentally alter the future of computing and improve our quality of life in countless ways, such as enhancing scientific learning, managing complex systems and improving the productivity and output of virtually all sectors of the economy.

Despite these potential benefits, important ethical questions and societal concerns have been raised about some applications of AI, including possible misuse or malevolent use as well as privacy and cybersecurity risks. Critics also fear job losses, as many routine tasks could be automated with AI. However, the overall effect of substituting AI-assisted capital for labor remains ambiguous, because these technologies and the expanding markets they create are expected to generate employment opportunities in new fields. These potential adverse consequences have prompted many to call for regulation.

Additionally, transitioning to an AI economy raises concerns about energy consumption and environmental impacts, as the data centers that house the hardware and other resources required for AI technologies use a considerable amount of power. Estimates suggest that data centers consume 2 to 3 percent of U.S. and global electricity. Given this concern, AI energy consumption and its resultant carbon footprint require a policy response that evaluates the overall impacts of AI, considering both the power used directly by AI applications and their ability to improve energy efficiency and lower carbon emissions in key sectors of the economy.

In this paper, we explore these issues by briefly explaining the accelerated computing behind AI models and the key benefits the technology offers. We then discuss two of the bigger concerns surrounding AI and machine learning—energy consumption and regulation—and offer suggestions that policymakers can consider to ensure that the technologies are able to flourish safely and responsibly.

Understanding AI, Accelerated Computing and Energy Needs

Much of the consternation over the existential threats posed by AI’s ability to mimic human rationality is misplaced, given that current AI models are generative. Generative AI is not the same as artificial general intelligence, in which machines think and learn like humans; rather, generative AI models produce content by predicting likely outputs from patterns in the datasets on which they are trained. ChatGPT, for example, relies on a generative AI model with an enormous number of parameters (originally 175 billion and, reportedly, 1.76 trillion in its latest iteration), trained on a large dataset to predict patterns in responses to user queries and produce text-based content.

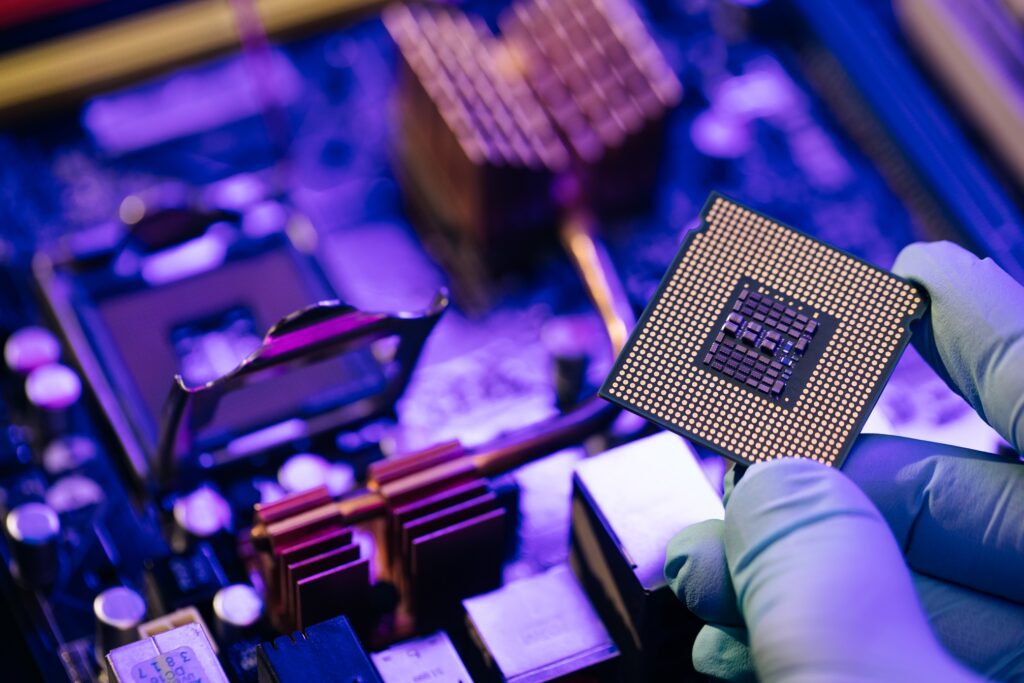

Accelerated computing is at the heart of generative AI, providing the computational power necessary to build and run these complex models. It combines graphics processing units (GPUs) with central processing units (CPUs) and other specialized hardware, such as tensor processing units (TPUs), so that large datasets can be processed much more quickly and efficiently than with CPUs alone. Whereas CPUs are designed to execute instructions largely sequentially on a relatively small number of cores, GPUs, TPUs and other hardware accelerators are built to perform many calculations in parallel, which is the kind of processing that effective AI requires.
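
To make the sequential/parallel distinction concrete, the Python sketch below computes the same matrix-vector product two ways. It is an illustration, not a description of how any particular AI system is implemented, and the function names are hypothetical. The first version loops over one output value at a time, as a single CPU thread would; the second expresses the work as a single matrix operation. Because each output value depends only on one row of the weight matrix, the values are independent and can be computed simultaneously, which is the property GPUs and TPUs exploit. The sketch assumes NumPy is installed; NumPy itself runs on the CPU, so the example shows the structure of the computation rather than GPU speedups.

```python
import numpy as np

def matvec_sequential(weights, x):
    """Compute y = weights @ x one output element at a time (sequential style)."""
    rows, cols = weights.shape
    y = np.zeros(rows)
    for i in range(rows):
        total = 0.0
        for j in range(cols):
            total += weights[i, j] * x[j]
        y[i] = total
    return y

def matvec_batched(weights, x):
    """Express the same computation as one matrix-vector product.

    Each output element depends only on one row of `weights`, so the rows are
    independent; a parallel backend (multi-core BLAS, or a GPU/TPU library)
    is free to compute them at the same time.
    """
    return weights @ x

# Hypothetical layer: 256 outputs, 512 inputs, random values for illustration.
rng = np.random.default_rng(0)
W = rng.standard_normal((256, 512))
x = rng.standard_normal(512)

# Both approaches give the same answer; only the order of work differs.
print(np.allclose(matvec_sequential(W, x), matvec_batched(W, x)))
```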

Importantly, although AI-optimized hardware may lower per-task energy consumption because of its efficiency, the total energy consumption of AI workloads can still be significant because AI models are typically large and often run continuously. This is especially true when creating and running models capable of tasks such as image recognition, natural language processing and predictive analytics. Consider, for example, the real-time data processing required to pilot autonomous vehicles, where data from onboard sensors must be analyzed immediately alongside detailed navigation maps, object recognition and car-to-car communication. One estimate suggests that a single autonomous vehicle can generate up to 5,100 terabytes of data annually.
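
For a sense of scale, the short calculation below converts the 5,100-terabyte annual estimate into an average data rate. It is a back-of-the-envelope sketch under stated assumptions: it uses decimal units (1 TB = 1,000,000 MB), and the operating time `hours_per_day` is a hypothetical input, since a vehicle that generates data only while driving must produce it faster than the year-round average.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds

def average_rate_mb_per_s(terabytes_per_year, hours_per_day=24.0):
    """Average data rate (MB per second) implied by an annual data volume.

    Assumes decimal units (1 TB = 1,000,000 MB) and that data is generated
    only during the hypothetical `hours_per_day` of operation.
    """
    megabytes = terabytes_per_year * 1_000_000
    operating_seconds = SECONDS_PER_YEAR * hours_per_day / 24.0
    return megabytes / operating_seconds

print(round(average_rate_mb_per_s(5_100)))     # continuous operation -> 162
print(round(average_rate_mb_per_s(5_100, 8)))  # hypothetical 8 hours/day -> 485
```

Even averaged over every second of the year, the estimate implies roughly 160 megabytes of data per second from a single vehicle.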

Because of the size and energy requirements of AI models, concerns over energy consumption are shaping technological development, as energy efficiency is seen as a key factor in the sustainability and cost-effectiveness of AI technologies. Hardware manufacturers have economic incentives to develop products that reduce energy consumption so they can gain market share over their rivals. Likewise, data center operators have incentives to reduce overall power usage with energy-efficient cooling systems and more efficient energy management.

Potential Benefits of an AI Economy

The use of machine learning and AI modeling is increasingly important for the large-scale, real-time data processing and analytics that underlie advances in many fields. This increased processing power is useful for applications that require immediate responses, such as autonomous vehicles, where decisions must be made in fractions of a second based on large amounts of incoming sensor data. Similarly, AI-enabled traffic management systems can identify and resolve bottlenecks and other problems in real time, improving traffic flow while reducing overall carbon emissions.

AI is also useful for data-intensive research, such as in the pharmaceutical sciences, where new and personalized drug therapies could be developed at significantly lower cost and more quickly. In fact, the pharmaceutical industry is already applying such technology, and several AI-designed drugs are now moving into clinical trials. In agriculture, AI can improve crop yields, reduce pesticide use and optimize the supply chain for agricultural products, and in the energy field, it can be used to optimize electricity grid management while also making buildings greener and smarter. Table 1 highlights these and other potential benefits offered by AI and machine learning.

Table 1: Beneficial Uses of AI by Industry

| Industry | Beneficial Uses of AI |
| --- | --- |
| Healthcare | Improve diagnostics and patient care; enable personalized treatment programs; predict disease outbreaks; optimize hospital operations; accelerate drug discovery |
| Agriculture | Optimize crop yields; predict disease and pest outbreaks; automate farming tasks; improve supply chain efficiency |
| Transportation and Logistics | Enable autonomous vehicles; optimize routing and delivery schedules; improve supply chain efficiency; enhance safety |
| Energy | Optimize grid management; increase the efficiency of renewable energy sources; reduce energy usage in buildings and industry |
| Manufacturing | Automate manufacturing tasks; optimize production schedules; improve quality control; optimize supply chain management |
| Retail | Personalize customer experiences; optimize inventory management; enhance logistics; enable “smart” marketing |
| Finance/Insurance | Assess and manage insurance risks; detect fraud; optimize investment strategies; personalize financial services |
| Education | Personalize learning; automate grading and feedback; predict student outcomes; enable new modes of online learning |
| Environment | Enhance climate models; optimize resource use; monitor and evaluate environmental risks |
| Public Safety and Disaster Response | Predict and respond to natural disasters; enhance emergency services; improve weather forecasting and storm tracking; optimize resource allocation during crises |

Greening AI

Despite the potential economic and social benefits of AI, one of the notable concerns with its deployment is its carbon footprint and potential impact on energy consumption. Fortunately, the industry is adapting as it grows, adopting practices that reduce the overall carbon footprint and energy consumption of AI technologies. One area of research focuses on improving the efficiency of the hardware required for AI modeling. As noted previously, data centers house specialized hardware designed for accelerated computing, such as the GPUs and TPUs needed to run machine learning on large datasets. As the demand for AI increases, hardware that consumes less energy becomes a competitive advantage.

For example, NVIDIA is developing new generations of chips and hardware designed to use GPUs more effectively, lowering energy consumption and improving the cost-effectiveness of AI and machine learning. While NVIDIA is currently the market leader in GPUs designed for machine learning and data centers, competitors such as AMD and Intel are developing their own GPUs, as are a host of startups. Even Google and Amazon have developed their own AI chips.

In addition to more efficient hardware, machine-learning algorithms can be optimized to reduce their computational requirements and thereby the energy required to run them. Techniques such as pruning (removing weights that contribute little to a model’s output), quantization (storing and computing with lower-precision numbers) and knowledge distillation (training a smaller model to reproduce the behavior of a larger one) can simplify models and reduce the amount of computation required, leading to energy savings. Likewise, energy-efficient coding practices, such as optimizing data structures, avoiding unnecessary computations and using energy-efficient algorithms, can reduce the energy needed to run AI applications. Additionally, smaller, more compact models require less computation and therefore less energy; identifying where they can be used in place of larger, more complex models while still achieving the required accuracy and precision is yet another path to lowering AI energy requirements.
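
Two of these techniques can be sketched in a few lines of Python with NumPy. The example below applies magnitude pruning (zeroing the half of the weights with the smallest absolute values) and symmetric 8-bit quantization to a random weight matrix standing in for one layer of a model. The matrix, the 50 percent pruning fraction and the function names are illustrative assumptions; real frameworks implement these techniques in their own ways and typically fine-tune the model afterward to recover accuracy. Knowledge distillation requires training a second model and is not shown.

```python
import numpy as np

def magnitude_prune(weights, fraction):
    """Zero out roughly `fraction` of the weights with the smallest magnitudes."""
    threshold = np.quantile(np.abs(weights), fraction)
    return np.where(np.abs(weights) < threshold, 0.0, weights)

def quantize_int8(weights):
    """Symmetric linear quantization of float weights to 8-bit integers."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # avoid dividing by zero
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from int8 values and the scale."""
    return q.astype(np.float32) * scale

# Hypothetical layer of 1,000 x 1,000 float32 weights.
rng = np.random.default_rng(0)
w = rng.standard_normal((1000, 1000)).astype(np.float32)

pruned = magnitude_prune(w, 0.5)
q, scale = quantize_int8(w)
approx = dequantize(q, scale)

print(f"fraction zeroed by pruning: {np.mean(pruned == 0):.2f}")
print(f"storage: {w.nbytes} bytes float32 -> {q.nbytes} bytes int8")  # 4000000 -> 1000000
print(f"max quantization error: {np.max(np.abs(w - approx)):.4f}")
```

Storing each weight in 1 byte instead of 4 cuts memory use and data movement by about three-quarters, which is one reason quantized models can run on less energy; the cost is a small rounding error in each weight, bounded by half the scale factor.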

It is also possible to change where computing is carried out to minimize energy use. Edge computing, which allows local devices to perform some of the necessary computations rather than sending all data to a data center, can reduce energy costs, particularly those associated with data transmission. Edge computing can also reduce latency, which is especially important for AI applications that require real-time responses. In effect, edge computing brings the benefits of AI to local settings, such as homes, factories or the smart instruments and appliances that make up the Internet of Things.
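
The data-transmission savings can be illustrated with a simple comparison: sending every raw sensor reading to a data center versus summarizing on the device and forwarding only a summary plus any unusual readings. The sketch below uses simulated readings, a JSON encoding and a hypothetical alert threshold; all three are assumptions chosen for illustration, and real devices would use their own formats and filtering rules.

```python
import json
import random

def raw_payload(readings):
    """Bytes sent if every sensor reading is transmitted to a data center."""
    return len(json.dumps(readings).encode("utf-8"))

def edge_payload(readings, alert_threshold):
    """Bytes sent if the device summarizes locally and forwards only a summary
    plus the readings above a hypothetical alert threshold."""
    summary = {
        "count": len(readings),
        "mean": sum(readings) / len(readings),
        "max": max(readings),
        "alerts": [r for r in readings if r > alert_threshold],
    }
    return len(json.dumps(summary).encode("utf-8"))

# Simulated sensor stream: 10,000 readings centered on 20.0 (illustrative only).
random.seed(0)
readings = [round(random.gauss(20.0, 2.0), 2) for _ in range(10_000)]

print("raw bytes: ", raw_payload(readings))
print("edge bytes:", edge_payload(readings, alert_threshold=26.0))
```

The trade-off is that the device must have enough computing power to do the summarizing, and the data center no longer sees the full raw stream.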

Additionally, data centers can lower energy consumption by adopting more efficient power supplies and cooling systems and by improving server utilization. Importantly, AI itself can help data centers manage these factors more effectively and identify opportunities to reduce energy inputs. In fact, Google, Meta and Microsoft all rely on AI to improve the functioning of their data centers. Google, for example, used AI developed by its DeepMind unit to reduce the energy used for cooling its data centers by up to 40 percent. There are also opportunities to incorporate renewable energy more effectively into data center operations. The Citadel Campus in Reno-Tahoe, described as the largest data center in the world at 7.2 million square feet, is powered by renewable energy.
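
The 40 percent figure refers to the energy used for cooling, not to a data center’s total consumption. The sketch below shows how a cut in cooling energy translates into a smaller reduction in total facility energy, using power usage effectiveness (PUE), the ratio of total facility energy to the energy used by the computing equipment itself. The facility figures are hypothetical assumptions, not data from Google or any other operator.

```python
def facility_energy(it_kwh, cooling_kwh, other_overhead_kwh):
    """Return total facility energy and PUE (total energy / IT energy)."""
    total = it_kwh + cooling_kwh + other_overhead_kwh
    return total, total / it_kwh

# Hypothetical facility: IT load, cooling and other overhead are illustrative.
it, cooling, other = 100.0, 30.0, 10.0

before_total, before_pue = facility_energy(it, cooling, other)
# Apply a 40 percent reduction to cooling energy only.
after_total, after_pue = facility_energy(it, cooling * (1 - 0.40), other)

print(f"PUE {before_pue:.2f} -> {after_pue:.2f}")                      # PUE 1.40 -> 1.28
print(f"total energy reduction: {1 - after_total / before_total:.1%}")  # 8.6%
```

In this hypothetical facility, a 40 percent cut in cooling energy lowers total energy use by under 9 percent; the larger the share of energy that goes to cooling, the larger the overall saving.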

Finally, it is crucial to remember that beyond data centers, AI also has the potential to contribute significantly to reducing carbon emissions and lowering energy consumption throughout the economy. AI models can optimize energy use in buildings and industry, enhance electricity grid management and facilitate more efficient transportation systems, among other applications.

Thus, the energy consumption of AI and machine learning is a concern worth addressing, but with the understanding that AI is a new field emerging in a dynamic marketplace, where new technologies, greener coding and more efficient energy management are already combining to reduce energy demand. These practices should be encouraged, and policymakers and regulators should be cognizant of both the evolving nature of the market and the inherent, market-driven incentives to reduce the overall power load of data centers.

Regulate or Innovate?

Given the rapidly evolving nature of AI technology and the concern regarding its high demand for energy, it is understandable that policymakers, technologists and other stakeholders are debating regulation. Yet the increase in the demand for energy must be evaluated within a proper framework, which requires comparing the energy use and outcomes achievable through the computing power of AI with the alternative. That is, we must consider how much energy would be required to generate the same outcomes and increases in productivity and economic growth in the absence of AI and machine learning, if those same gains are even feasible. Such an approach would highlight both the costs and benefits of the increased energy consumption of AI-based technologies.
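
This comparison can be expressed as a simple calculation: estimate the energy needed to deliver a given output with AI, estimate the energy a non-AI alternative would need for the same output, and take the difference. The sketch below does this with a hypothetical `Scenario` type and made-up figures. It assumes energy scales linearly with output, which real processes may not, and it cannot capture cases where the non-AI alternative could not deliver the same gains at all.

```python
from dataclasses import dataclass

@dataclass
class Scenario:
    """Energy needed to deliver a given amount of output (hypothetical units)."""
    name: str
    energy_kwh: float
    output_units: float

    def energy_per_unit(self) -> float:
        return self.energy_kwh / self.output_units

def net_energy_change(with_ai: Scenario, without_ai: Scenario) -> float:
    """Energy with AI minus energy without it, for the output of `with_ai`.

    Scales the non-AI scenario linearly to the same output; a negative
    result means AI delivers that output with less total energy.
    """
    baseline = without_ai.energy_per_unit() * with_ai.output_units
    return with_ai.energy_kwh - baseline

# Illustrative figures only: AI energy includes the computing it requires.
with_ai = Scenario("with AI", energy_kwh=1_200.0, output_units=1_000.0)
without_ai = Scenario("without AI", energy_kwh=1_500.0, output_units=1_000.0)
print(net_energy_change(with_ai, without_ai))  # -300.0
```

Looking only at the AI scenario’s 1,200 kilowatt-hours would suggest a pure cost; comparing it with the alternative shows a net saving in this hypothetical case.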

Still, should a market failure be identified, it is essential that any regulatory framework considered be flexible enough to allow stakeholders and other parties to innovate and identify market-driven solutions to reduce energy demands. Overly prescriptive rules would likely become outdated quickly, locking in suboptimal technologies and unnecessarily impeding newer, more efficient approaches for managing energy consumption. Excessive regulation would also hamper the adoption of newer technologies or the deployment of renewable energy resources that could offer significant economic and social gains, including optimizing the power grid to more efficiently generate and deliver electricity.

Regulating this technology would also be challenging because AI is not confined to a specific sector of the economy; it spans a wide variety of sectors such as healthcare, finance, transportation, energy and more—all of which have existing regulators with the authority to intervene in the marketplace. Before creating new regulatory bodies, it would be prudent to identify specific weaknesses, market failures or challenges that cannot be resolved within existing regulatory frameworks. To do so, policymakers will require a deep understanding of the technology, its applications and its implications. Close collaboration between policymakers, AI researchers, industry stakeholders and the public will yield a more robust policy framework for AI technologies.

Conclusion

Rapid advances in AI and machine learning are poised to drive economic growth by automating routine tasks, increasing productivity, enhancing business processes and reducing waste. Although managing the energy needs of AI models is an important issue, high energy costs offer natural incentives to develop technologies and products that increase efficiency and lower the energy required to run AI-based technologies. This continued evolution of AI technology requires a regulatory framework that fosters flexibility and promotes innovation. Rather than create overly rigid rules that can quickly become obsolete, regulators should work to ensure that electricity generation can keep pace with growing demand in order to fully capture the potential benefits generated by AI-based technologies.