AI and the 2024 Election Part I: State Lawmakers Take Action

This is part of the AI and the 2024 Election Series.

Advances in artificial intelligence (AI) are having a profound impact across America as the technology integrates into nearly all aspects of everyday life, from healthcare to banking. Elections are no exception, and AI is already presenting risks and opportunities related to election administration, cybersecurity, and the information environment.

While the potential impacts of AI on elections are wide-ranging, policymakers have focused almost exclusively on harms to the information environment. The 2024 rush to pass new laws and regulations was driven by widespread public concern that AI would be used to accelerate the creation and spread of “deepfakes” and other forms of misinformation about candidates, election officials, and the voting process.

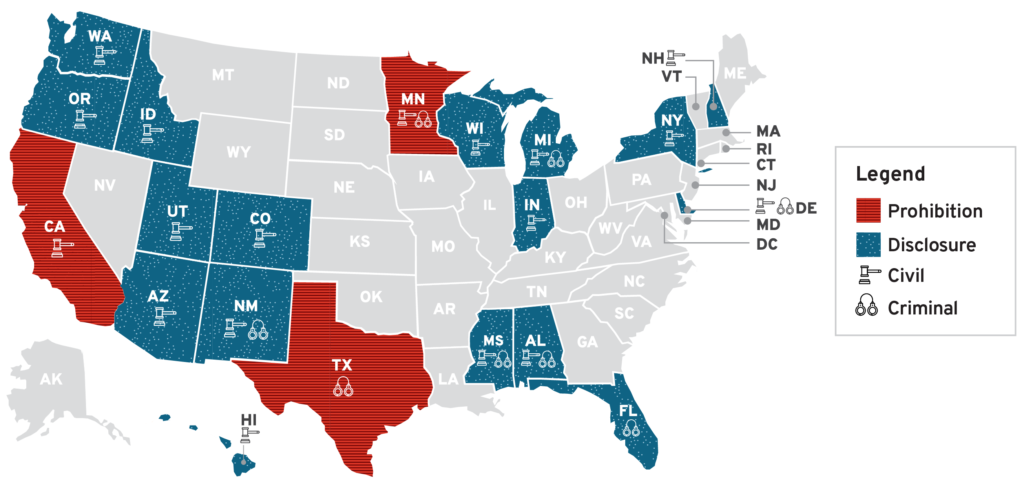

While Congress and various regulatory agencies were unsuccessful in their attempts to crack down on the use of AI in federal election communications, state-level efforts were more effective. As shown on the following map, 20 states now have laws targeting the use of AI to generate deceptive election content, and 15 of those laws were approved in 2024 alone. The specifics vary from state to state, but the most common approach is to pair disclosure requirements with civil penalties.

Legislation Enacted on AI and Elections by Type and Penalty (2019-2024)

Note: Map current as of Nov. 22, 2024

Disclosure Emerges as Leading Approach; Prohibition Faces Constitutional Challenges

Requiring a disclosure when AI is used to generate or alter an election communication is the most common state regulatory approach, used by 18 of the 20 states that have enacted such laws. Disclosures provide transparency about the use of AI, so the public can consume the content with the understanding that the audio, video, or image has been manipulated and does not depict reality. The concept is a familiar one: the Federal Election Commission (FEC) and many states already require campaigns to include various disclosures on certain political advertisements.

Texas and Minnesota prohibit election-related AI-generated deepfakes entirely, but these restrictions stand on shaky legal ground after a recent court decision in California. The law in question, AB 2839, aimed to shift the state’s regulation of AI in elections from a disclosure requirement to a general prohibition (with an exception for parody and satire). Approved in September as an emergency measure, the legislation took effect immediately after Gov. Gavin Newsom signed it. A federal judge soon blocked the law as an unconstitutional restriction on speech. Although the lawsuit is still pending, the concerns outlined in the judge’s order suggest that the prohibition approach imposes significant restrictions on political speech that likely violate the First Amendment.

States Prioritize Enforcement Through Civil Penalties

One of the key policy decisions for states choosing to regulate the use of AI in election communications is whether to treat noncompliance as a criminal or civil violation. This distinction has important implications for who can initiate legal proceedings over a violation and what types of penalties can be imposed. Of the 20 states currently regulating the use of AI in election communications, 13 rely exclusively on civil penalties for enforcement, five use both civil and criminal enforcement, and two use criminal enforcement only.

In practice, civil enforcement typically means that the subject of an AI-generated deepfake can seek a court-ordered injunction that prevents additional distribution of the manipulated media. Alabama, Hawai‛i, Minnesota, Mississippi, and Oregon also permit certain government officials—such as the attorney general, secretary of state, or local county attorneys—to seek the injunction. The content creator may also be subject to additional fines. On the other hand, only the government can initiate criminal prosecution, with penalties ranging from fines to prison time.

Narrow Tailoring Helps Prevent Regulation of Innocuous AI Uses

Artificial intelligence is becoming increasingly integrated into daily life, which could lead to unintended consequences if regulations target the technology itself rather than specific uses of it. For this reason, every state law regulating the use of AI in election communications includes provisions that narrow the scope of the restriction in some way. Examples include requirements that the AI-enhanced communication be “materially deceptive,” be created with the intent to injure a candidate or influence an election outcome, or be generated within 60 or 90 days of the election.

These limitations seek to prevent overregulation of innocuous uses of AI, such as edits that alter or enhance a video or image without changing its underlying message, or uses that lack any intent to deceive voters or harm a political candidate. While prohibition laws in California and Minnesota have already faced legal challenges as free speech violations, these narrowing provisions could help disclosure laws withstand future challenges. So far, no court has ruled on that question.

Support for Regulation Is Broad and Bipartisan

Legislatures controlled by both Republicans and Democrats have supported the regulation of AI in elections, and the bills were often approved by wide margins. Of the 20 states that approved AI restrictions, 10 were controlled by Democrats and eight by Republicans. In the remaining two, Arizona and Wisconsin, Republican-controlled legislatures and Democratic governors teamed up to approve legislation. In 13 of these states, the bills passed with more than 90% of lawmakers voting in favor.

Although the number of laws was roughly evenly split between the two parties, Democrats, who controlled fewer state governments than Republicans, approved AI restrictions at a much higher rate. This should come as no surprise, as public support for AI regulation is generally stronger among Democrats; however, a majority of Americans on both sides of the aisle support regulation.

Louisiana, a state controlled by Republicans, was the only state whose governor vetoed an AI restriction approved by the legislature. In his veto message, Gov. Jeff Landry cited concerns that the legislation would restrict political speech and violate the First Amendment.

Conclusion

In the lead-up to the 2024 election, a significant number of states took bipartisan action to regulate the use of AI in election communications. Given the constitutional concerns surrounding prohibition, these laws primarily rely on transparency requirements when AI is used in deceptive election communications. This level of policy activity at the state level contrasts with the relative inaction in Washington, D.C., which the next post in this series will discuss in detail.