Misinformation and Platform Moderation

Introduction

Social media’s rapid rise over the last decade has raised grave concerns among policymakers and the public regarding its role in spreading misinformation. Content moderation (identifying and managing content that violates the terms of service or other policies) is one of the main tools such platforms have to combat misinformation. However, several problems emerge when moderation is practiced at scale.

At the core of the moderation problem is the fact that there is no formally accepted definition for the term “misinformation.” A common theme among various definitions is that misinformation is information that runs contrary to or distorts generally accepted facts; however, research shows that this definition quickly breaks down when it comes to more contentious topics. What we consider misinformation depends highly on our individual view of what constitutes truth versus falsehood. Because platforms are unable to satisfy each individual perspective, it is likely there will always be some form of misinformation on social media.

This leaves platforms in a difficult position as final arbiters of seemingly limitless content. At this scale, it is impossible for humans to review every post manually, meaning automated decisions must be employed at the system level, something courts have recognized as a uniquely digital problem. Both automated systems and human reviewers inevitably make mistakes, so some share of a platform’s moderation decisions will always be wrong.

Given the controversy over COVID-19 misinformation, platforms are trying to minimize the amount of content they moderate while maximizing user awareness. If preserving content represents one end of the spectrum and removing it represents the other, the emergence of Community Notes represents a middle-ground approach that provides important context or refutation alongside questionable content rather than removing it.

The Emergence of Collaborative Context

In January 2021, Twitter (now X) unveiled “Birdwatch” (which would eventually become Community Notes) in response to concerns around content moderation. The foundation of this system lies in its crowd-sourced approach: When an automated system or individual user identifies a post as potentially misleading, eligible users can contribute contextual information to it. While the barrier to contribution is intentionally low to encourage broad participation, the system employs rigorous internal validation processes that often include a probationary period for new contributors. This multi-layered approach is designed to ensure that only consistently high-quality notes that achieve broad consensus are displayed.

A critical element of the Community Notes model is its emphasis on cross-viewpoint consensus. Notes are published only when contributors representing a diverse range of perspectives broadly agree on their helpfulness. This “bridge-based” ranking system—which identifies agreement between individuals who typically hold differing opinions—is instrumental in mitigating partisan bias and fostering more universally accepted contextual information. A continuous feedback loop, wherein users rate notes as either helpful or unhelpful, further informs the system’s ongoing assessment of contributor reliability and note quality.
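The bridging idea described above can be illustrated with a deliberately simplified sketch. It is not X's published algorithm, which is considerably more elaborate; the viewpoint labels, the `min_per_group` parameter, and the 0.7 threshold are all assumptions chosen for illustration. The sketch scores a note by its weakest support across viewpoint groups, so a note that only one side finds helpful cannot be published.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# One rating: (hypothetical viewpoint label of the rater, rated helpful?)
Rating = Tuple[str, bool]


def bridged_helpfulness(ratings: List[Rating], min_per_group: int = 2) -> float:
    """Return the lowest helpful share across viewpoint groups.

    Groups with fewer than `min_per_group` ratings are ignored. If fewer
    than two groups remain, there is no cross-viewpoint agreement to
    measure, so the score is 0.0.
    """
    votes: Dict[str, List[bool]] = defaultdict(list)
    for group, helpful in ratings:
        votes[group].append(helpful)

    shares = [
        sum(group_votes) / len(group_votes)
        for group_votes in votes.values()
        if len(group_votes) >= min_per_group
    ]
    if len(shares) < 2:
        return 0.0
    return min(shares)


def should_publish(ratings: List[Rating], threshold: float = 0.7) -> bool:
    """Publish only when every qualifying group meets the threshold."""
    return bridged_helpfulness(ratings) >= threshold


# A note that only one side likes versus a note both sides find helpful.
partisan_note = [("left", True)] * 5 + [("right", False)] * 5
bridging_note = (
    [("left", True)] * 4 + [("left", False)]
    + [("right", True)] * 4 + [("right", False)]
)

print(should_publish(partisan_note))
print(should_publish(bridging_note))
```

Taking the minimum rather than the average is the design choice that matters here: an average would let overwhelming support from one group mask rejection by another, which is exactly the partisan outcome bridging is meant to avoid.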

Early research on Community Notes shows its efficacy in generating high-quality information. One study of more than 45,000 notes determined that up to 97 percent were “entirely accurate,” with roughly 90 percent relying on moderately to highly credible sources. Due in part to its bridging function and rating systems, Community Notes is able to produce high-quality information agreed upon by a broad political spectrum. Another study found that notes on inaccurate posts reduced “retweets” (re-postings of original content to individual users’ feeds) by half and increased the probability of the author deleting the original post by up to 80 percent—findings confirmed by research from Twitter itself.

In terms of bias, a study of German-language notes found “no clear political orientation” among helpful Community Notes and determined that the bridging algorithm “ensures a certain numerical balance between parties to the left and right of the cent[er].” One survey found that these notes increase user trust in the accuracy of online information. Most research to date supports the conclusion that Community Notes is a highly effective and trustworthy system for annotating posts without requiring deletion or censorship; however, it also has limitations (as discussed later in this piece).

A Proposed Framework for Industry Collaboration

The demonstrated efficacy and growing popularity of Community Notes suggest that its underlying value could extend across the entire social media industry, transcending its status as an isolated feature of a single platform. Given that its source code and data are entirely open source, other major platforms have already expressed interest in (or begun implementing) similar features. Examples include Meta, which has explicitly adopted X’s open-source algorithm as a foundational element for its own Community Notes feature, and TikTok, which recently launched a bridge-based ranking system called “Footnotes.”

While these platforms will naturally adapt the system to their unique environments, this widespread interest underscores a critical opportunity for industry-wide collaboration. The core functionalities of these systems—including network analysis, consensus requirements, ratings, and bridging algorithms—are modular enough to be integrated into virtually any social media network.

To leverage this collective potential and cultivate a more robust, industry-wide solution to misinformation, this Real Solutions piece proposes establishing an independent nonprofit institution called the Community Notes Industry Center (CNIC). The CNIC would develop, maintain, and openly disseminate transparent, community-driven tools for contextualizing online information. In doing so, it would become the trusted, neutral steward of a universally adopted open-source framework that empowers users to collaboratively identify and address misinformation, thereby safeguarding free expression and promoting civic discourse across all digital platforms.

Structurally, the institution’s nonprofit status would enhance its perceived neutrality by underscoring its commitment to public benefit rather than commercial gain. Funding would be secured through a transparent consortium model, with contributions from participating social media platforms potentially complemented by philanthropic grants and research funding. The institution would remain completely independent of public funding to avoid policy conflicts and constitutional concerns.

A multi-stakeholder board, essential for ensuring true independence and broad representation, would oversee governance. Ideally, it would comprise independent academics specializing in media studies and information science, civil liberties and free speech advocates, representatives from contributing technology companies, experts in open-source software development, and public interest representatives. Such a diverse composition would actively work to prevent undue influence from any single participant.

The CNIC’s core functions would encompass the custodianship and continuous improvement of the central algorithm and its associated software, ensuring its open, transparent, and accessible nature for all platforms. It would also foster a vibrant community of developers, researchers, and contributors from various backgrounds to enhance the system collectively by managing contributions, code reviews, and comprehensive documentation. Furthermore, the institution would conduct and sponsor independent research into the efficacy of Notes-style systems, identifying vulnerabilities and developing best practices for implementation while working toward a standardized, interoperable framework that allows seamless integration across diverse social media platforms.

Benefits

Policy Legitimacy

This proposed model offers several compelling advantages over fragmented, proprietary approaches. Centralizing the development and oversight of core functions within an independent entity would significantly enhance public trust in the mechanisms used to address misinformation. The standards created through Community Notes could function much like those of rating institutions for other media, such as the Entertainment Software Rating Board, the Motion Picture Association, and the Comics Code Authority. While the CNIC would not have a corresponding rating system for content, it would represent the same model of industry collaboration to give users more information about a product before they engage with it.

While the Community Notes model is not a panacea for misinformation, there is a high degree of political pressure for the social media industry to signal its commitment to combating the problem, and a formalized institution with open-source code and data could relieve this pressure. Although each firm would be able to customize moderation choices and results, a central location for the majority of the code and data would represent a substantial improvement in transparency.

Interoperability

Greater collaboration could result in other technical gains as well. For example, a process might be established wherein notes are created collaboratively and customized by each platform:

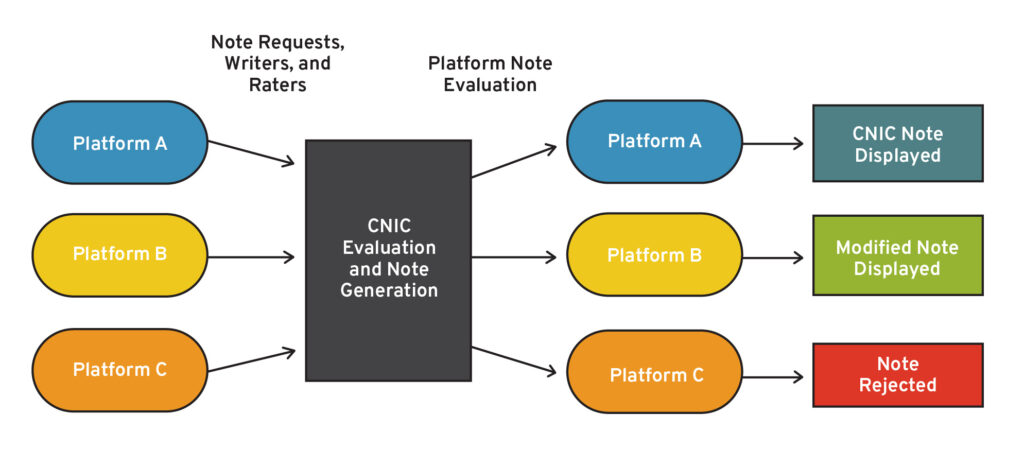

Such a process would permit the CNIC to leverage requests, writers, and raters from multiple platforms while allowing each platform to maintain full control over the result. This interoperability would allow notes to be identified, created, and shared seamlessly across participating platforms, which would significantly accelerate the spread of accurate information and contextual understanding to counter misinformation in real time.

Importantly, because each firm could customize its implementation of the tool, the CNIC would not act as true middleware in which the platforms themselves are passive. For example, firms could raise the publication threshold so that notes appear less often, or lower it so that flagged content receives context more quickly. They could also adjust topics, style, or the note itself before pushing it to the platform. This is crucial because, despite the model being open source, each firm must maintain full control over the content appearing on its platform.
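As a rough illustration of this kind of per-platform control, the sketch below shows how a platform might filter and restyle a shared note before display. Everything in it is hypothetical: `SharedNote`, `PlatformPolicy`, the field names, and the score scale are assumptions for illustration and do not correspond to any existing CNIC or platform API.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass(frozen=True)
class SharedNote:
    """A note produced by the shared process (hypothetical structure)."""
    note_id: str
    topic: str
    text: str
    score: float  # shared helpfulness score, assumed to range from 0.0 to 1.0


@dataclass
class PlatformPolicy:
    """Platform-specific settings applied before a note is displayed."""
    min_score: float = 0.7
    excluded_topics: Set[str] = field(default_factory=set)
    prefix: str = ""

    def apply(self, note: SharedNote) -> Optional[str]:
        """Return the text to display, or None if the platform declines it."""
        if note.score < self.min_score:
            return None  # below this platform's own threshold
        if note.topic in self.excluded_topics:
            return None  # platform has opted out of notes on this topic
        return f"{self.prefix}{note.text}"


note = SharedNote("n1", "health", "The cited study was later retracted.", 0.75)

lenient = PlatformPolicy(min_score=0.6, prefix="Readers added context: ")
strict = PlatformPolicy(min_score=0.9)

print(lenient.apply(note))
print(strict.apply(note))
```

The shared note is immutable (`frozen=True`) while each policy is local to a platform, which mirrors the division of labor described above: the common process produces the note, and each firm alone decides whether and how it appears.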

Combating Bias/Capture

One criticism of the notes system is that social platforms can become popular among users with particular ideological or political interests. For example, although there is no data on X’s actual political orientation, a June 2025 Pew survey found that 55 percent of self-identifying Democrats and Democratic-leaning individuals said they think X “supports the views of conservatives.”

An interoperable system could help prevent echo chambers from developing on any given platform by ensuring that notes from other platforms are shown. Additionally, because the process requires ideological agreement before a note is published, platforms could leverage writers and raters from other platforms to review cross-platform notes. This would further enhance and legitimize the process and show that platforms are committed to dealing with misinformation.

Advancing AI

Furthermore, advancements in artificial intelligence (AI) hold considerable promise for the rapid identification and labeling of AI-generated videos or images. While the continuous evolution of generative AI necessitates ongoing research and development to maintain efficacy, AI might also be used to develop simulated networks and agents that act as notes contributors. These agents could be trained to represent opposing viewpoints and craft a statement that each side finds agreeable.

In a sense, X is already experimenting with a hybrid model that allows users to tag the system’s built-in AI, “Grok,” which responds to queries with context and note-like information. Although Grok is not programmed to act as a note contributor, an industry-led team could focus its efforts on honing these abilities while maintaining transparency.

Conclusion

Online misinformation presents undeniable challenges, and the public’s concern is well founded. However, the path forward should not be paved with broad governmental mandates that risk stifling innovation and legitimate discourse. The viral dynamic of misinformation can be met with equally viral, decentralized, and collaborative solutions.

An industry-led, open-source “notes” repository stewarded by an independent nonprofit and coupled with strategic partnerships and a role as communications intermediary represents a pragmatic, market-oriented approach. This framework leverages the power of collective intelligence to combat misinformation while upholding the fundamental principles of free speech and an open internet. It offers a more flexible and adaptable model than rigid government regulations, fostering a shared responsibility within the industry and empowering users with the tools and information needed to navigate the complexities of the digital age. We urge industry leaders, policymakers, and civil society organizations to collaboratively explore and invest in this proposed framework to transform a promising innovation into a foundational pillar for a more trustworthy online environment.